From Sci-Fi to Reality: The Rise of the Humanoid Robot

Scroll through social media this week and you’ll see the same jaw-dropping clip: a gleaming humanoid robot calmly loading a dishwasher, folding laundry and answering voice commands as if it has lived in your kitchen for years. The video comes from Figure AI’s third-generation prototype, Figure 3, and it has shattered the “robots are decades away” narrative. A decade ago, the most we expected from a humanoid robot was a cautiously choreographed walk across a research lab. Today, thanks to explosive progress in computer vision, reinforcement learning, and low-cost sensors, a machine can navigate an ordinary home, recognise fragile porcelain and grasp it with surgeon-level precision.

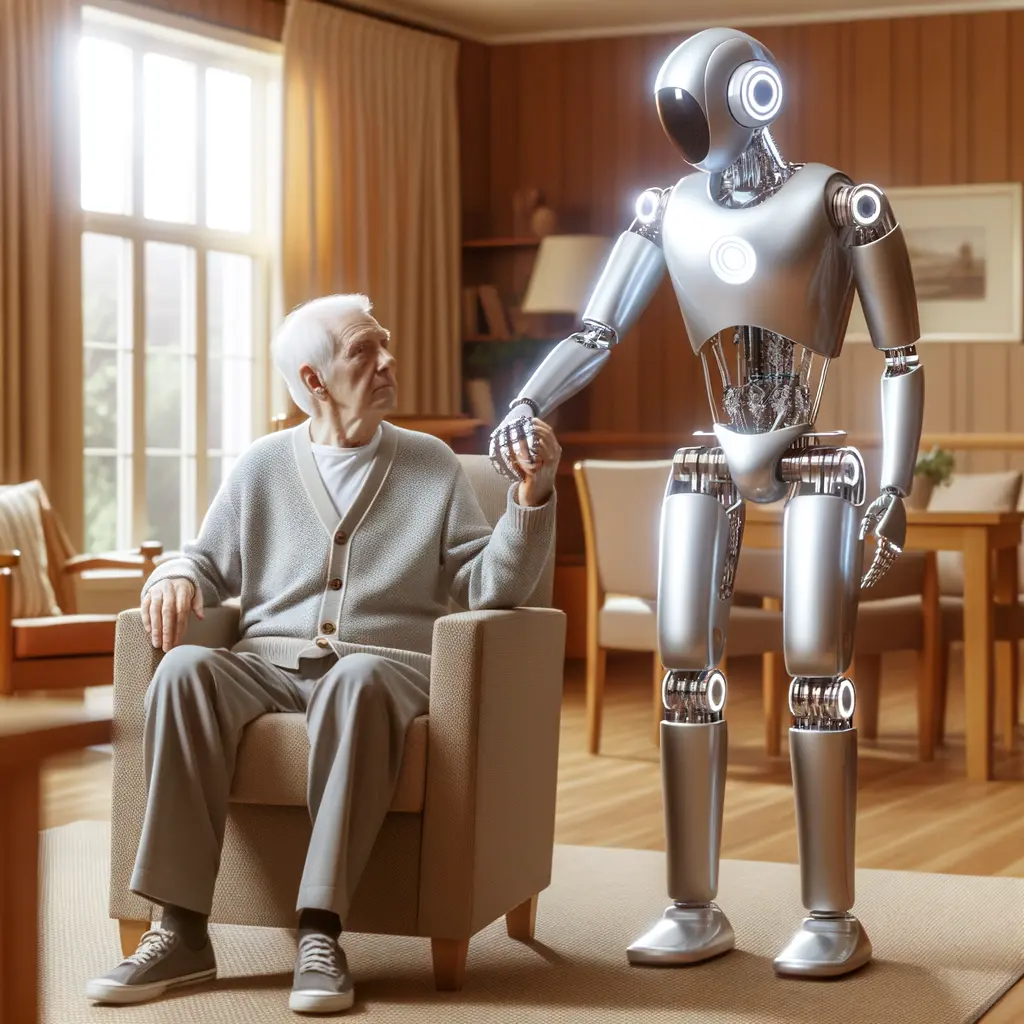

That leap matters because humans built the world for humans—door handles, light switches, supermarket shelves and conveyor belts are all sized for a person. A robot shaped like a person can immediately step into those environments without expensive re-tooling. In other words, the humanoid robot is not a sci-fi novelty; it’s the missing puzzle piece that could unlock trillions of dollars in productivity gains, close labour gaps worsened by ageing populations, and extend independent living for the elderly. Before we dive into the engineering wizardry and industry chess game unfolding between Figure AI and Tesla, let’s unpack how we finally arrived at this viral turning point.

Figure 3 Hardware: Cameras in the Palms & Wireless Charging

While the TikTok clip looks like pure magic, Figure 3’s hardware rewards a closer look. Start with the hands, the business end of any humanoid robot mission. Instead of relying solely on external cameras, Figure engineers embedded high-resolution Sony IMX sensors directly into each palm. Even when the fingers curl around a mug and obscure the main chest cameras, the robot maintains visual contact with the object, adjusting grip in real time. Layered beneath the skin-like silicone are 120 tactile cells capable of detecting forces as light as three grams, roughly the weight of a sheet of paper. That sensitivity explains why the robot can secure delicate glassware without a cringe-inducing crunch.
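To put the three-gram figure in perspective, here is a minimal Python sketch, not Figure’s firmware. It converts that mass to a force and uses it as a contact threshold over an array of 120 readings. The function names, the newton-based readings and the simple count-based check are all assumptions made for illustration.

```python
G = 9.80665  # standard gravity in m/s^2

def grams_to_newtons(grams: float) -> float:
    """Convert a mass in grams to its weight force in newtons."""
    return grams / 1000.0 * G

# Values taken from the article; everything else here is hypothetical.
NUM_CELLS = 120
MIN_DETECTABLE_N = grams_to_newtons(3.0)  # about 0.029 N

def cells_in_contact(readings_n: list[float]) -> int:
    """Count tactile cells whose reading meets the detection threshold."""
    if len(readings_n) != NUM_CELLS:
        raise ValueError(f"expected {NUM_CELLS} readings, got {len(readings_n)}")
    return sum(1 for r in readings_n if r >= MIN_DETECTABLE_N)
```

A real grip controller would look at where and how hard the cells are pressed, not just how many are touching, but the threshold idea is the same.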

Mobility received an equally radical makeover. Figure 3 swaps bulky power cables for inductive charging coils built into its carbon-fibre feet. When battery levels dip below 20 percent, the humanoid robot simply strolls onto a floor pad—no plugs, no downtime. To keep up with human cadence, the company redesigned every actuator, doubling torque density while shaving 15 kilograms off total mass. The result is smoother, faster motion that looks less like robotics class and more like a confident co-worker.
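The charging behaviour described above boils down to a small decision rule. The sketch below is one hedged way to express it in Python. The 20 percent trigger comes from the article, while the `RobotState` type, the action names and the 80 percent resume level (added so the robot does not bounce on and off the pad) are hypothetical.

```python
from dataclasses import dataclass

LOW_BATTERY_THRESHOLD = 0.20  # from the article: head to the pad below 20 percent
RESUME_THRESHOLD = 0.80       # assumed value, not from the article

@dataclass
class RobotState:
    battery_fraction: float  # 0.0 (empty) to 1.0 (full)
    on_charging_pad: bool

def next_action(state: RobotState) -> str:
    """Pick the next high-level action from battery level and pad contact."""
    if state.on_charging_pad:
        # Stay on the pad until the assumed resume level is reached.
        if state.battery_fraction < RESUME_THRESHOLD:
            return "charge"
        return "resume_work"
    if state.battery_fraction < LOW_BATTERY_THRESHOLD:
        return "walk_to_pad"
    return "continue_work"
```

For example, `next_action(RobotState(0.19, False))` returns `"walk_to_pad"`, while a robot at 50 percent that is already standing on the pad keeps charging.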

Safety wasn’t sacrificed for speed. Soft textile shields cover pinch-points, proximity sensors halt limbs within 20 milliseconds of detecting a human, and redundant processors cross-check every movement. Together these innovations turn a lab curiosity into hardware ready for a warehouse aisle—or your living room.
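As a rough illustration of how redundant processors might cross-check a movement, the Python sketch below compares two independently computed joint-velocity commands and falls back to a halt if they disagree or a person is detected. The function names, the tolerance and the choice of zero velocity as the halt command are assumptions, not details from Figure.

```python
HALT_DEADLINE_S = 0.020  # from the article: limbs halt within 20 milliseconds

def commands_agree(primary: list[float], secondary: list[float],
                   tolerance: float = 0.01) -> bool:
    """True if both processors produced matching joint-velocity commands."""
    if len(primary) != len(secondary):
        return False
    return all(abs(a - b) <= tolerance for a, b in zip(primary, secondary))

def safe_command(primary: list[float], secondary: list[float],
                 human_detected: bool) -> list[float]:
    """Return the command to execute, or all zeros to halt the limbs."""
    if human_detected or not commands_agree(primary, secondary):
        return [0.0] * len(primary)
    return list(primary)
```

In a real system this check would have to complete well inside the 20-millisecond budget, which is why it is kept to a simple element-wise comparison here.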

An AI Brain for the Body: Training Figure on Massive Data

Great hardware is useless without an equally capable mind. Figure’s leadership understood this early, securing investment from OpenAI and Nvidia so the humanoid robot could learn the same way people do—by watching, trying, failing and refining. The company captured thousands of hours of human kitchen routines using GoPro cameras and annotated the footage with action labels. This data set feeds a multimodal transformer that fuses video, LiDAR, force-sensor readings and language instructions into a single representation. When you say “stack the bowls,” the model doesn’t just translate words into coordinates; it predicts the entire sequence of hand trajectories, force profiles and recovery moves if something slips.

Training runs on clusters of Nvidia H100 GPUs, pumping out policy updates that are continuously distilled into a lighter runtime model deployed on the robot’s on-board Jetson AGX Orin computers. Because bandwidth is precious, Figure uses a reinforcement-learning-from-simulation (RL-Sim) loop: millions of virtual dish-stacking attempts play out overnight in the cloud, and only the most promising policies make it to the physical prototypes. That’s why the motions you saw in the viral video feel almost human—each gesture is the culmination of months of exploration compressed into a 0.02-second control tick.
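The “only the most promising policies” step can be pictured as a simple filter. The Python sketch below is a toy stand-in, not Figure’s pipeline. Each candidate policy has a hidden success probability, a fake simulator runs many attempts, and the top scorers are kept. The names `simulate_success_rate` and `select_policies` are hypothetical. The 0.02-second tick from the article also works out to a 50 Hz control rate.

```python
import random

CONTROL_TICK_S = 0.02                   # from the article
CONTROL_RATE_HZ = 1.0 / CONTROL_TICK_S  # 50 control updates per second

def simulate_success_rate(skill: float, episodes: int, rng: random.Random) -> float:
    """Toy simulator: each episode succeeds with probability `skill`."""
    successes = sum(1 for _ in range(episodes) if rng.random() < skill)
    return successes / episodes

def select_policies(policies: dict[str, float], episodes: int,
                    top_k: int, seed: int = 0) -> list[str]:
    """Score every candidate in simulation and keep the best `top_k` names."""
    rng = random.Random(seed)
    scores = {name: simulate_success_rate(skill, episodes, rng)
              for name, skill in policies.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]
```

Calling `select_policies({"a": 0.0, "b": 1.0, "c": 0.5}, episodes=200, top_k=1)` keeps only `"b"`, the candidate that never fails in simulation.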

Crucially, the AI brain is domain-agnostic. The same neural weights that fold laundry can be fine-tuned, via a few hours of demonstrations, to operate a pneumatic drill or unload pallets. This flexibility is what convinces investors that advanced AI robotics will transform multiple trillion-dollar markets.

Tesla Optimus vs Figure 3: Who Wins the Humanoid Robot Race?

Figure 3 may have captured the internet’s imagination, but it is not walking the stage alone. Tesla’s Optimus—a project green-lit by Elon Musk in 2021—targets the same promise with a very different playbook. Where Figure emphasises dexterity and an AI-first approach, Tesla leans on its hard-won mastery of supply-chain optimisation and gigafactory scale. According to Musk, Tesla can leverage existing casting machines, battery lines and Dojo supercomputers to crank out millions of units at a projected price tag of $20,000. If that forecast holds, Optimus production could eclipse the company’s electric-vehicle revenue within a decade.

Spec sheets show key contrasts. Optimus’ joints rely on off-the-shelf harmonic drives, granting 5 kg-cm more torque in the elbows, while Figure 3’s custom actuators win on speed and weight. Tesla houses its compute in the torso, cooled by a compact heat-pipe array similar to the one in the Model Y. Figure distributes compute modules closer to major joints to minimise latency. Both humanoid robot contenders boast redundant depth cameras, tactile skins and self-calibrating joints, yet their go-to-market strategies diverge. Tesla intends to deploy Optimus first in its own factories (label removal, parts kitting, quality inspection) before selling to consumers. Figure is courting third-party pilots in logistics and elder-care facilities to validate general-purpose value.

For enthusiasts, the rivalry is a feature, not a bug. Healthy competition will pressure both firms to reduce cost, improve safety standards, and open developer APIs faster. The real winner is whichever team translates YouTube virality into sustained, affordable production.

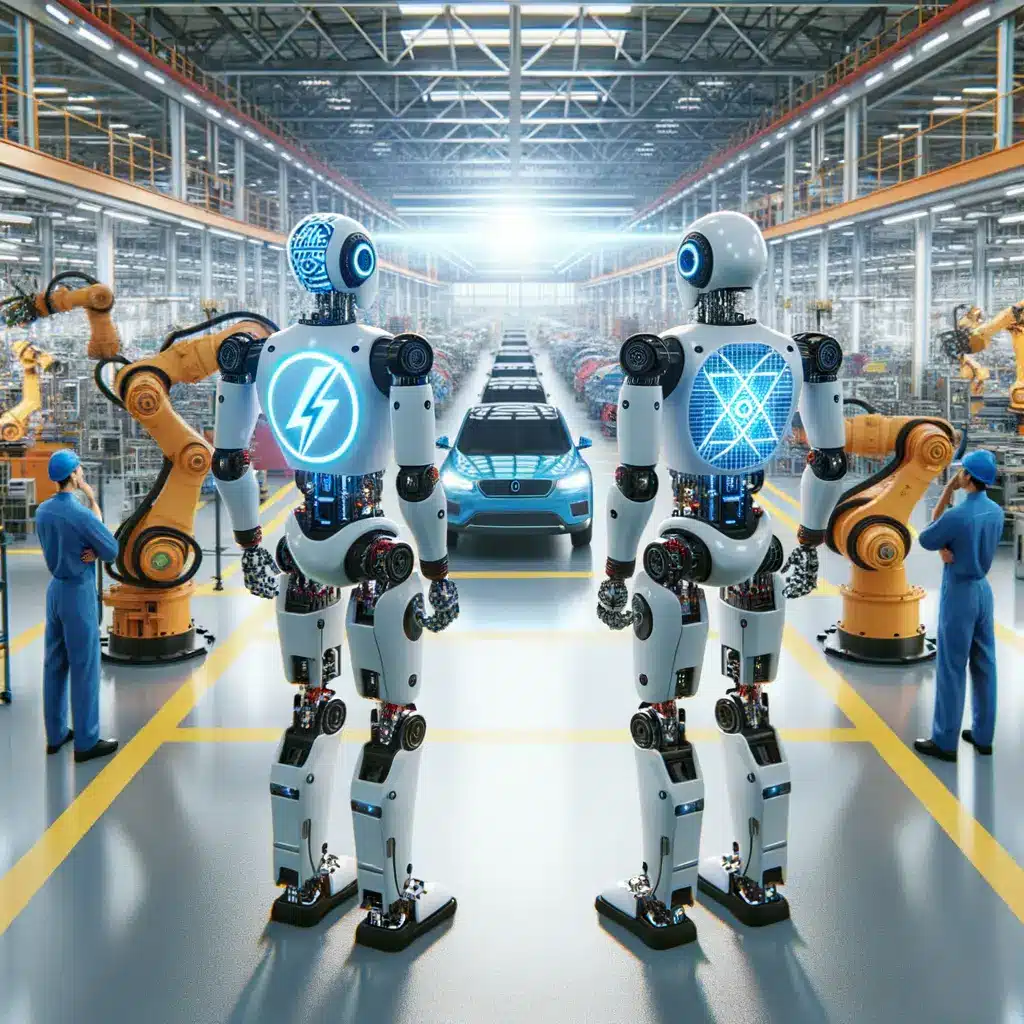

Beyond Chores: Advanced AI Robotics in Industry & Care

Household chores make great demo reels, but the stakes for advanced AI robotics stretch far beyond a spotless sink. The International Federation of Robotics reports that global installations of industrial units crossed 553,000 last year, yet more than 70 percent remain static arms welded to a cell. A mobile humanoid robot eliminates that constraint. Imagine a disaster-relief scenario where smoke and debris render traditional wheeled robots useless; a bipedal machine can climb stairs, open doors and close gas valves with human tools. In elder-care facilities, a single unit could lift patients, fetch medication and detect falls, offsetting chronic nursing shortages projected to hit 13 million by 2030.

Warehousing giants like Amazon already spend billions on autonomous mobile robots, but those systems require conveyor redesigns and QR codes on the floor. Swapping them for a humanoid robot that navigates existing aisles and drives a pallet jack could slash retrofitting costs. Even creative industries will feel the ripple. With dexterous hands and vision, a robot could operate a RED Komodo camera on set, repositioning lights between takes—functions traditionally handled by gaffers and grips.

Of course, the conversation isn’t complete without ethics. Who bears liability if a 90-kilogram robot drops a tray on someone’s foot? How do we protect biometric data collected by embedded cameras? Regulators in the EU are drafting the AI Act, while U.S. agencies explore OSHA adaptations. Companies deploying pilots should consult our in-depth guide to AI compliance and read the recent case study on warehouse safety sensors to get ahead of the curve.

Adapting to the Future of Work Automation Today

The last time technology upended daily life this quickly, the iPhone had just introduced the idea of apps. In 2024, the humanoid robot is poised to become the next platform, capable of running “skills” the way your phone runs software. Whether you are a freelancer, facility manager or policy maker, complacency is not an option. Start by auditing workflows for repetitive physical tasks—bin sorting, equipment cleaning, stock checks—that could be off-loaded to a general-purpose machine within five years. Cross-train employees on systems thinking and exception management so they can supervise fleets instead of performing every motion themselves.

Preparing for the future of work automation requires light-touch infrastructure upgrades: wide Wi-Fi 6E coverage, easily readable QR labels, and cloud connectors that send work orders directly to a humanoid robot’s task queue. Educational institutions can prepare the next generation through interdisciplinary programs that blend mechatronics, ethics and design thinking. Parents might encourage children to explore coding not just on screens but through robotics kits.
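To make the “task queue” idea concrete, here is a small Python sketch of how work orders might be queued for a robot, with urgent jobs served first and ties broken by arrival order. The `WorkOrder` and `TaskQueue` names, the priority scale and the fields are hypothetical and do not reflect any vendor’s actual API.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional

@dataclass(order=True)
class WorkOrder:
    priority: int                       # lower number means more urgent
    seq: int                            # arrival order, used to break ties
    task: str = field(compare=False)
    location: str = field(compare=False)

class TaskQueue:
    """Priority queue of work orders waiting for a robot."""

    def __init__(self) -> None:
        self._heap: list[WorkOrder] = []
        self._counter = itertools.count()

    def submit(self, task: str, location: str, priority: int = 5) -> None:
        order = WorkOrder(priority, next(self._counter), task, location)
        heapq.heappush(self._heap, order)

    def next_order(self) -> Optional[WorkOrder]:
        """Pop the most urgent order, or return None if the queue is empty."""
        return heapq.heappop(self._heap) if self._heap else None
```

A facility system could call `submit("stock check", "aisle 4", priority=1)` whenever a new job arrives, and the robot would pull jobs with `next_order()` as it finishes each one.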

Most importantly, keep the conversation human-centred. Automation at this level should elevate well-being, not erode it. By shaping deployment policies today—fair wages for displaced workers, transparent data practices, rigorous safety standards—we ensure the technology serves society. The future has indeed walked into the room, wrapped in washable textiles and ready to collaborate. And if you ever doubt how fast horizons shift, revisit Figure 3’s viral video: a reminder that tomorrow’s breakthroughs are only a firmware update away.